How To Stop Confidential Data Leakage From Internal GenAI Apps: A 2025 Enterprise Playbook

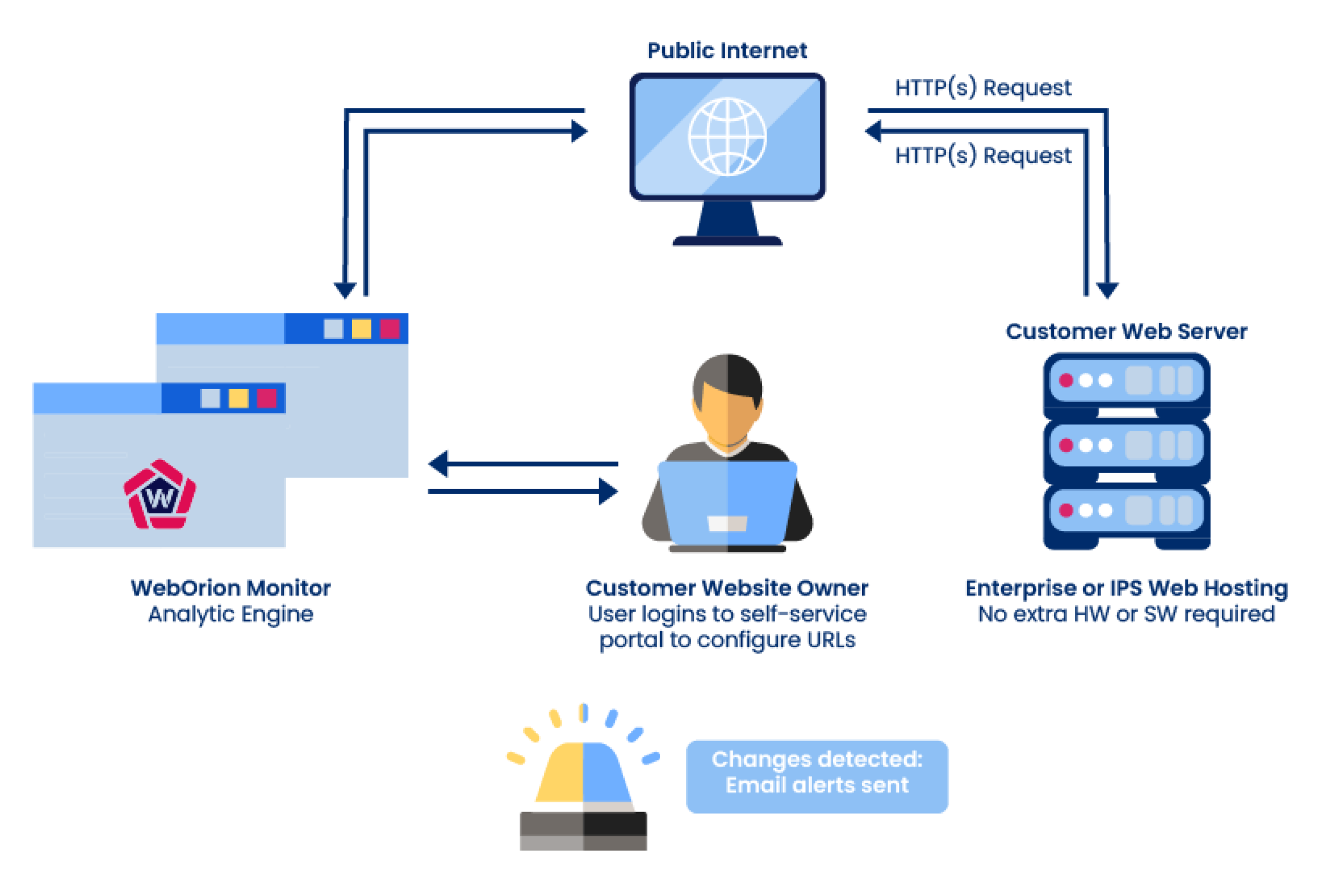

To stop confidential data leaking through internal GenAI apps, tighten day-one controls (SSO, user provisioning, data retention); enforce real-time redaction of prompts and outputs at the boundary with GenAI Protector Plus; lock down RAG sources and integrations; route confidential collaboration into CoSpaceGPT; and use WebOrion® Monitor to catch unauthorised changes to public sites quickly. All of this fits within a practical 90-day rollout.
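Boundary redaction means scrubbing sensitive values from a prompt before it reaches the model, then scrubbing the model's output again before it reaches the user. The Python sketch below shows that pattern in a generic way under simple assumptions. It is not GenAI Protector Plus's API or detection logic. The regex rules, the `CONFIDENTIAL-` tag format, and the `redact` and `guarded_call` helpers are hypothetical stand-ins for whatever classifiers a real deployment uses.

```python
import re
from typing import Callable, Dict, List, Pattern, Tuple

# Hypothetical detection rules for illustration only; real deployments tune
# these (or use ML classifiers) for their own data classes.
PATTERNS: Dict[str, Pattern[str]] = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    # 13-16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    # Assumed internal marking convention, e.g. "CONFIDENTIAL-X7".
    "SECRET_TAG": re.compile(r"\bCONFIDENTIAL-[A-Z0-9]+\b"),
}


def redact(text: str) -> Tuple[str, List[str]]:
    """Replace each match with a typed placeholder and report which rules fired."""
    findings: List[str] = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[REDACTED:{label}]", text)
        if count:
            findings.append(label)
    return text, findings


def guarded_call(prompt: str, model: Callable[[str], str]) -> str:
    """Redact the prompt on the way out and the model output on the way back."""
    safe_prompt, _ = redact(prompt)   # inbound: nothing sensitive leaves
    raw_output = model(safe_prompt)   # hypothetical model client
    safe_output, _ = redact(raw_output)  # outbound: catch leaks in responses
    return safe_output
```

Redacting in both directions matters. A model can reproduce sensitive content drawn from RAG sources even when the user's prompt was clean, so a filter on the output side catches leaks that a prompt-only filter would miss.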