Generative AI is transforming how enterprises operate, from automating customer service to accelerating software development. But alongside this opportunity comes unprecedented security risk. In fact, according to IBM’s 2023 X-Force Threat Intelligence Index report, AI-related security incidents spiked by 26 % in 2023 alone, and half of security leaders now rank generative AI governance as a top concern. High-profile cases of confidential data leaks via ChatGPT and even outright bans of certain AI tools by governments underscore the stakes. For CIOs, CISOs and IT teams, the challenge is clear: How do we safely harness GenAI’s power in the enterprise without exposing ourselves to new threats?

The answer is a new class of solutions called generative AI security platforms for enterprises, which are designed to mitigate these risks. This definitive guide breaks down the essential capabilities to look for, common gaps in today’s offerings, and how to evaluate a GenAI security platform that will truly protect your organisation’s data and reputation. By the end, you’ll understand what an enterprise-grade GenAI security solution should provide and why a unified approach is vital to staying secure and compliant while innovating with AI.

The New Risks Generative AI Brings to Enterprises

Adopting GenAI at scale introduces a broader attack surface and unique failure modes that traditional security tools were never built to handle. Unlike conventional software, large language models can be manipulated through inputs in ways that produce unintended or malicious outputs. They also rely on vast data (often including sensitive information) which can be mishandled if not properly controlled. Key risks include:

Sensitive Data Exposure: Employees may inadvertently feed proprietary code, customer data or trade secrets into public AI tools. Those inputs could be stored or even regurgitated to other users. GenAI apps can inadvertently expose sensitive company data including intellectual property, financial records and customer information, leading to serious privacy and compliance risks. With no safeguards, a single careless prompt could leak crown-jewel data.

Prompt Injection & Manipulation: Bad actors can craft inputs that hijack an AI model’s behaviour. A prompt injection attack inserts malicious instructions or queries that trick the model into revealing confidential info or producing harmful actions. For example, an attacker might prompt an internal chatbot to ignore its safety rules or output unauthorised data. These prompt-level exploits are a novel threat unique to GenAI and they require dedicated detection and defence mechanisms.

“Shadow AI” Usage: Much like shadow IT, employees may use unsanctioned AI tools or plugins without security approval. An engineer might paste code into a random coding assistant or a marketer might use an unvetted AI copywriter service. This shadow AI bypasses enterprise oversight and opens the door to data leaks and third-party vulnerabilities. Without visibility and governance, companies can’t protect what they don’t even know is in use.

Unsafe or Non-Compliant Outputs: GenAI models can generate toxic, biased or insecure content if misused. This ranges from AI-written code with security flaws to offensive or misleading text that could violate regulations. Adopting a zero-trust stance on AI output is critical because organisations must moderate and filter model responses for things like hate speech, personal data or even hidden malware links. Failing to do so can result in regulatory penalties or reputational damage if harmful AI-generated content reaches employees or customers.

Evolving Attack Techniques: Cybercriminals are already adapting attacks to GenAI. For instance, malware or phishing schemes might be embedded in AI-generated content, and models themselves could be poisoned with malicious training data. Traditional defences often lag behind these AI-driven threats, which makes GenAI systems harder to monitor, govern and defend with legacy tools. Enterprises need security solutions that evolve as fast as the threat landscape around AI does.

Key Features to Look For in a Generative AI Security Platform

When evaluating GenAI security platforms for enterprise use, CIOs and CISOs should insist on a comprehensive set of capabilities. The goal is to cover the full lifecycle of generative AI usage, from controlling inputs to monitoring outputs, within a unified governance framework. Here are the essential features and safeguards a robust GenAI security platform should provide:

1. Data Leakage Prevention and Privacy Controls

Preventing unintended data exposure is priority number one. The platform should act as a data-loss-prevention (DLP) layer for all AI interactions. This includes:

Input Scrubbing and Redaction: Automatically removing or anonymising sensitive information in prompts before they ever reach the AI model. For example, if an employee tries to paste a client’s personal data or source code into ChatGPT, the system should detect it and redact or block the transfer. Some solutions achieve this through built-in prompt sanitisation and PII redaction filters.

Policy-Based Data Controls: Administrators must be able to define what categories of data are never allowed to be shared with GenAI, aligning with regulations (GDPR, HIPAA, etc.) and internal policies. The platform should then enforce those policies in real time. For instance, it could block any prompt containing financial identifiers or customer PII and alert the user or security team.

Secure Handling of AI Outputs: Just as inputs can leak data, model outputs might inadvertently contain sensitive information, especially if the model was trained on internal data or has context memory. A strong platform will scan outputs to ensure they do not include things like internal file paths, API keys or confidential text fragments. Any such occurrences should be logged and sanitised.

Data Residency and Sovereignty: Enterprises in regulated industries or regions often worry where their prompts and AI-generated data are stored. An enterprise-grade solution should offer options to keep data within certain geographic boundaries or your cloud environment. Some platforms even serve as a proxy, ensuring queries to external AI services are encrypted and not retained, thereby preventing vendor-side data exposure.

PII and Confidential Information Detection: Just as we do not want users sending secrets in, we do not want the AI spitting secrets out. Effective output moderation will flag if an AI response contains what looks like private data or company-confidential information. For example, if an internal GenAI tool responds with a snippet of real customer data or internal server names, the platform can redact that or stop the response, since it could indicate either a prompt leak or an overly permissive training set.

In essence, the platform needs to stop data leaks before they happen and it should not rely on employee diligence alone. By intercepting and inspecting prompts and responses, it should enforce an “AI usage policy” that keeps sensitive information inside the company walls. Given the high cost of data breaches (averaging £4.45 million in 2023) and the trust at stake, this capability is absolutely non-negotiable.

2. Prompt-Level Threat Detection and Defence

Generative AI introduces a new category of application-layer attacks via malicious or cleverly crafted prompts. Security solutions must therefore operate at the prompt level, analysing and mediating what goes into and comes out of the model:

Prompt Injection Protection: The platform should recognise known patterns of prompt injection and block or neutralise them. This can include detecting phrases that attempt to override system instructions (e.g. “ignore previous instructions…”) or SQL command patterns in user input that could target underlying systems. Modern GenAI firewalls deploy multi-layered defences ranging from protecting hidden system prompts to using contextual guardrails that spot when a user prompt seems crafted to manipulate the AI.

Threat Intelligence on AI Attacks: New prompt-based attacks and jailbreak techniques emerge constantly. Look for solutions that are tied into up-to-date threat feeds or databases of LLM attack patterns. For example, some vendors crawl the internet for the latest GenAI threats and use human reviewers to label new exploits, feeding this into their detection engines. This ensures the platform can catch novel attack vectors rather than only known ones.

Real-Time Prompt Scoring: Each prompt could be evaluated for risk in real time, similar to how a WAF (web application firewall) scores web requests. High-risk prompts, such as those attempting privilege escalation or data exfiltration, should be blocked or require additional validation. Lower-risk but suspicious prompts might trigger an alert or “Are you sure?” confirmation. The key is an intelligent middle layer that does not unduly hinder normal use but is ready to intercept dangerous queries.

Jailbreak and Abuse Detection: Beyond outright attacks, users may attempt to misuse AI (knowingly or not) by making it produce disallowed content. The security platform should be able to detect attempts at jailbreaks (prompts that try to bypass safety filters) and other policy violations. For instance, if a user asks an AI to generate hate speech or illicit instructions, the system should intervene either by preventing the query or catching the unsafe output.

Prompt-level defence is a defining feature of GenAI security platforms, setting them apart from traditional cyber-security tools. Whereas legacy firewalls cannot interpret AI queries or responses, a GenAI-aware solution acts as a specialised gatekeeper for your AI models and integrations. According to industry experts, prompt injection and data leakage are the two key risks to address in this domain, so any enterprise solution must excel at identifying and defusing malicious prompts in real time.

3. Access Governance and Policy Enforcement

Controlling who can use generative AI, and how they use it, is an essential aspect of enterprise AI security. This goes beyond basic user authentication; it is about embedding GenAI into your access governance and IT policy framework:

User Access Controls: Integration with SSO/identity systems so that only authorised employees or roles can access certain AI tools or internal LLM applications. For example, you may decide only R&D and Support teams can use a particular code-generation AI, or that interns are barred from using any external AI service. The platform should enforce these entitlements and log who is using what.

Shadow AI Detection: As discussed, one of the biggest risks is unsanctioned AI usage. A strong solution will automatically discover GenAI applications being used in the environment, much like a CASB discovers cloud-app usage. With an up-to-date registry of AI services (ChatGPT, Bard, Claude, Copilot and even niche apps), the platform can flag when someone tries an unapproved tool. Leading platforms boast libraries of thousands of known GenAI apps and can identify usage patterns in network traffic or OAuth logs.

Granular Policy Controls: It is not all-or-nothing. You will want nuance in how AI is adopted. Look for the ability to classify AI apps as sanctioned, tolerated or banned and enforce different rules accordingly. For sanctioned apps, perhaps allow use but with DLP scans; for tolerated ones, allow only read-only queries (no data uploads); and outright block unsanctioned ones. Additionally, fine-grained controls can govern specific actions. For instance, blocking file uploads to an AI chatbot or limiting the amount of data a user can submit in one prompt.

Usage Auditing and Coaching: A good platform does not just play bad cop; it can guide users towards safer behaviour. Some solutions will notify users in real time if they are about to do something against policy (like paste a client email into an AI writer), effectively coaching them on proper AI use. All user activity with GenAI – prompts submitted, results received – should be logged in audit trails. This auditability is crucial for compliance and incident investigation, providing full visibility into how AI is being used across the enterprise.

In short, access-governance features ensure that GenAI use remains within your organisation’s risk appetite and compliance boundaries. They provide the oversight needed to prevent the Wild-West scenario of employees using any AI tool freely. By integrating with your existing identity and SaaS-security systems, a GenAI security platform extends corporate governance to this new frontier of AI applications.

4. Output Moderation and Response Control

Filtering and controlling the outputs of generative AI is just as important as governing inputs. Even with safe prompts, an advanced AI model might produce content that is disallowed, sensitive or simply incorrect in a dangerous way. Thus, enterprise solutions should provide output-moderation capabilities:

Toxic and Unsafe Content Filtering: The platform should automatically scan AI outputs for inappropriate or harmful material, similar to how email filters scan for profanity or DLP violations. This includes detecting toxic language, hate speech, harassment or bias in text outputs, and either blocking such responses or masking them out before they reach the end-user. It should also catch any content that could indicate self-harm or violence if your usage context requires that level of safety monitoring.

Regulatory and Compliance Checks: Depending on your industry, certain AI-generated content might pose compliance issues (imagine an AI financial adviser making an unvetted recommendation, or a healthcare AI giving medical advice). Solutions can implement rule-based or AI-driven checks on outputs to ensure they do not break industry regulations or internal approval workflows. In high-stakes cases, you might require that some AI outputs be reviewed by a human before action is taken – a feature known as human-in-the-loop output approval.

Hallucination and Error Prevention: GenAI models are notorious for sometimes generating false but confident-sounding information (the “hallucination” problem). While no tool can magically guarantee factual accuracy, a good platform can mitigate this. Techniques include cross-checking certain answers against trusted data sources, or at least flagging content that looks like a potential hallucination (e.g. the AI is citing a source that does not exist). For mission-critical use, the platform might enforce that only outputs with a high confidence score or that pass validation rules are delivered, and everything else gets escalated or labelled as unverified.

Response Throttling or Transformation: In some cases, moderation might involve editing the AI’s answer rather than blocking it entirely. For instance, if an AI’s response is good except for one sensitive detail, the platform could mask that detail and allow the rest. Or if an AI tries to output a large chunk of data (which could be a data dump), the platform might truncate the response and alert an admin for review.

By placing a safety net over AI outputs, enterprises ensure that what the AI says or generates will not create new liabilities. It is about catching unacceptable or risky content post-generation, before it causes damage. As one guide on AI safety put it, you should treat model outputs as untrusted and “wrap your model’s output in filters that catch problems” before they reach users. In practice, this output-moderation layer is indispensable for maintaining trust in AI-driven processes and staying compliant with content standards.

5. Integration with SOC Workflows and Enterprise Systems

Finally, any GenAI security platform aimed at the enterprise market must plug into your existing security and IT ecosystem. It should not be a black box on the side; rather, it becomes another source of critical insights for your security operations and a controllable part of your infrastructure:

SIEM/SOAR Integration: All relevant events – blocked prompts, flagged outputs, user policy violations, detected attacks – should be logged and exportable to your Security Information and Event Management (SIEM) system in real time. This allows your SOC analysts to get alerts when, say, someone attempted a prompt injection, just as they would for an attempted malware download. Some platforms provide out-of-the-box connectors for popular SIEM and SOAR tools so that AI-security incidents fit seamlessly into your incident-response workflows.

Dashboards and Reporting: In addition to raw logs, a centralised dashboard for GenAI usage and threats is valuable. The solution should offer reporting on things like number of prompts scanned, how many were blocked, which users are the top AI users, what types of content are being filtered out, etc. This gives leadership a clear view of AI adoption and risk trends. Enterprises will want the ability to customise reports, especially to demonstrate compliance with any AI policies or regulatory requirements.

API and DevOps Hooks: If your developers are building AI into your own applications, the security platform should provide APIs, SDKs or other hooks to embed protection directly in those apps. For example, an internal AI-powered app could call the platform’s API to screen each prompt and response. This ensures even custom-built solutions benefit from the centralised security controls. Likewise, integration with CI/CD pipelines or model-development workflows (to scan models for vulnerabilities before deployment) could be a consideration if you train your own models.

Collaboration and Ticketing Integration: When an AI-related security event occurs (e.g. an employee tried to enter restricted data), how does your team follow up? Look for features like automatic ticket creation in systems such as ServiceNow or JIRA for policy violations, or integrations that notify via Slack or Teams. Bridging that gap between the GenAI-security layer and your operational workflows will speed up response and remediation.

Scalability and Cloud Integration: As a SaaS-based platform, it should integrate with your cloud environment and scale effortlessly as usage grows. Multi-cloud and hybrid support might be important if your AI tools span on-prem and cloud. Ensure the platform supports the AI services you use (AWS, Azure OpenAI, Google PaLM, etc.) and can be deployed in regions aligned with your data-residency needs.

The bottom line is that a GenAI-security solution must be enterprise-ready and not a standalone gadget. It should feed into your existing defences and processes, amplifying them with AI-specific visibility. When done right, adopting such a platform can even improve your overall security posture by illuminating how data is used and shared in new AI workflows. You gain unified oversight. Your SOC can manage AI risk just as well as any other cyber risk, using the same playbooks and tools, now informed with GenAI-specific telemetry.

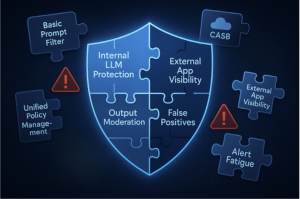

Where Current GenAI Security Solutions Fall Short

With the rapid rise of generative AI, a flurry of security tools has hit the market. Many address individual pieces of the puzzle, but few provide the comprehensive coverage enterprises truly need. It’s important to be aware of the common gaps and limitations in current offerings so you can avoid one-dimensional solutions:

Point Solution Overload: Most tools today tackle a narrow slice of GenAI risk. For example, one product might do AI model scanning for vulnerabilities, while another focuses only on prompt filtering or redacting sensitive data. On their own, each may work well, but they don’t solve the whole problem. Companies adopting point solutions end up with a patchwork, using one vendor for AI model governance, another for DLP, and a third for monitoring prompts. This results in complexity, higher costs, and potential blind spots between tools.

Lack of Unified Policy Management: Siloed tools mean siloed policies. An enterprise could find it cumbersome to ensure that the same data-classification rules or user-access policies are enforced across all AI touchpoints. Ideally, you want a single pane of glass to define and apply your GenAI usage policies universally. Unfortunately, many current solutions bolt AI controls onto existing products like a cloud proxy or an endpoint agent without offering a holistic policy engine for AI. The result is inconsistent enforcement and difficult administration.

Limited Scope (Internal or External, not Both): Some offerings are designed only for monitoring usage of third-party AI SaaS apps, acting as an AI-aware CASB, and they won’t protect your own custom AI applications. Others are built to wrap around an internal LLM app but provide no visibility into employees using external tools. This either-or approach is a major gap. Enterprises really need coverage for both scenarios, securing any generative AI app the business builds and any AI service employees consume. Solutions that address one and not the other leave half your attack surface unguarded.

Minimal Output Control: A number of tools emphasise input filtering and monitoring but do very little with outputs aside from maybe virus-scanning file downloads. Stopping bad prompts is crucial, but it’s not sufficient. Without robust output moderation, as described earlier, an AI security solution might let toxic or sensitive content slip through to end-users or external channels, which can be just as damaging. This is an area where ‘AI safety’ overlaps with security, and many products in the security market haven’t fully incorporated AI safety mechanisms like toxicity filters or hallucination detection.

Poor Integration and Alert Fatigue: Some early solutions function almost as black boxes or separate consoles that don’t integrate well with existing SOC workflows. They might lack the ability to export logs or send detailed alerts, forcing security teams to swivel-chair between tools. Others might generate too many false positives or low-value alerts if not finely tuned to an enterprise environment, leading to alert fatigue. A GenAI security tool that isn’t enterprise-friendly in deployment and operations can end up unused, or worse, give a false sense of security while genuine issues go unnoticed in the noise.

Staying Updated: The threat landscape in AI is evolving rapidly, with new jailbreaks and new model exploits, yet not all vendors have the resources to keep up. We see a gap in many offerings around continuous updates and improvement of their AI threat detection. Enterprise buyers should ask: does the solution learn from new incidents? Is it backed by a dedicated research team or threat-intel feed for AI? Security tools often lag behind, which makes AI systems harder to monitor and defend. Choosing a provider that’s laser-focused on GenAI and not treating it as a side feature can make a big difference in staying ahead of emerging risks.

How to Evaluate GenAI Security Solutions: A Checklist for CIOs and CISOs

Choosing the right generative AI security platform for your enterprise involves asking the right questions and digging into the details. Below is a checklist of key considerations and criteria to guide your evaluation. Use this as a framework when comparing vendors or auditing an in-house approach:

Data Protection and Compliance: Does the solution prevent data leaks effectively? Look for strong DLP features tailored to AI, including input scrubbing, output filtering, and support for your compliance needs such as GDPR, HIPAA, or data residency. Ask for demo scenarios of the tool catching sensitive data in prompts and blocking it. Verify how encryption and data storage are handled for any AI interactions the platform mediates.

Prompt Threat Detection: How does the platform defend against prompt-based attacks? Enquire about techniques for prompt injection prevention and whether the vendor maintains an updated library of known attack patterns or performs AI red-teaming. The solution should demonstrate real-time analysis of prompts with the ability to block or alter malicious ones without breaking legitimate functionality. Consider running a few tricky prompt examples to see if it catches them.

Access and Usage Governance: Can the platform discover and control all GenAI usage in your organisation? Ensure it covers both sanctioned and unsanctioned AI apps with discovery capabilities. Check that it integrates with SSO or identity providers for user-based policies. You’ll want to easily enforce who can use what AI service, when, and how much. Also evaluate the ease of policy configuration. It should have a straightforward interface to set rules like ‘Block uploads of file types X to AI service Y for all Finance users.’

Output Moderation and Safety: What safeguards are in place for AI outputs? The vendor should highlight mechanisms for filtering toxic or disallowed content and preventing unsafe actions suggested by AI. If your use cases involve code generation, ask how it handles potentially dangerous code output. Does it sanitise scripts or provide execution sandboxing? Essentially, verify that the platform doesn’t just trust the AI’s output blindly. It should treat it as another input to be vetted.

Integration with Enterprise Systems: Assess how well the solution will fit into your existing stack. Does it have out-of-the-box integration with your SIEM for centralised logging and alerting? Can it send alerts to your incident-response or ticketing system with useful context? Check supported deployment models such as purely SaaS, an on-premises option, or cloud-specific integrations to ensure it aligns with your IT strategy. A pilot or proof-of-concept can be illuminating here. See how easily your team can work the tool into daily operations.

Comprehensive Coverage: Map the solution’s features against all the areas we covered in this guide. Is anything missing? If a vendor only talks about one aspect such as monitoring user prompts and has little to say about output control or model-level security, that’s a red flag regarding comprehensiveness. The best platforms will be multi-layered and unified, not requiring multiple add-ons to address different use cases. They should protect both your internally developed AI solutions and employees using external AI tools under one umbrella.

Vendor Expertise and Roadmap: Finally, consider the provider’s focus and reputation in AI security. This field is fast-moving, and you want a partner who is investing heavily in keeping the platform up to date, such as through dedicated research, frequent updates, or participation in AI security communities. Ask about their roadmap, including upcoming features, support for new AI models or integrations, and more. A transparent, visionary roadmap is a good sign. Also seek customer references or case studies, especially for enterprises in your industry, to gauge real-world efficacy.

WebOrion® Protector Plus: A Unified GenAI Security Platform for Enterprises

When it comes to checking all the boxes above, CloudsineAI stands out by delivering a unified, comprehensive GenAI security platform purpose-built for enterprise needs. We have leveraged our deep background in web security with the WebOrion® suite to tackle generative AI threats head-on. The result is an all-in-one GenAI firewall solution that addresses the full spectrum of risks, from prompt injection to data leakage, while integrating smoothly into enterprise environments. Here’s how CloudsineAI meets the critical needs we’ve discussed:

Multi-Layered Prompt and Output Defence: Our platform provides context-aware guardrails around your AI models that intercept malicious prompts and scrub harmful outputs. With our patented ShieldPrompt™ technology, we layer protections such as system prompt isolation, dynamic input validation and content moderation filters to neutralise prompt injection attacks in real time. This means even advanced jailbreak attempts or hidden threats are blocked before they can cause damage.

Data Leak Prevention Built-In: Protecting sensitive information is one of the core strengths of our firewall. Our platform automatically detects and blocks any attempt to feed confidential data into proprietary GenAI applications using advanced PII detection and context-based rules.

Enterprise-Grade Policy and Integration: We’ve built WebOrion® Protector Plus with enterprise workflows in mind. Our policy engine is intuitive and allows your CISOs or risk officers to define rules based on your organisation’s specific needs.

Conclusion: Secure Your GenAI Future Today

Generative AI is poised to be a game-changer for enterprises, but it must be adopted responsibly. As we have explored in this guide, securing GenAI goes beyond traditional cyber-security. It requires new layers of protection and a holistic strategy. Enterprise leaders evaluating GenAI security solutions should demand a platform that protects data, thwarts prompt-based attacks, governs usage, filters outputs and integrates with existing operations. Anything less leaves too much to chance in a landscape where threats evolve as fast as technology.

The good news is that innovative solutions such as CloudsineAI are rising to meet this challenge, enabling organisations to embrace AI with confidence. By implementing a comprehensive GenAI security platform, you can unlock AI’s immense benefits safely, maintaining trust with customers and stakeholders while staying compliant with regulations.

Do not let security concerns hold back your AI innovation. Take action now to put the proper safeguards in place. If you are ready to protect your generative-AI initiatives with enterprise-grade security, contact CloudsineAI for a personalised demo or consultation. Our experts will show you how to deploy GenAI in your organisation securely and at scale, so you can drive innovation. Secure your GenAI future today.