Artificial Intelligence has woven itself into the daily workings of modern businesses, sparking a wave of efficiency and innovation, unlike anything we’ve seen before. AI-driven applications are shaking up entire industries, whether it’s customer-service chatbots that actually grasp the subtleties of human conversation or automated systems making sense of complex decisions behind the scenes. But with this rapid embrace of AI has come a tangled web of new security challenges—many of them more complex than anything we’ve dealt with before. Most cybersecurity frameworks were built with old-school software and networks in mind, so they often struggle to keep up with the unusual risks that come with AI. Bringing AI into the mix dramatically broadens the attack surface, adding new vulnerabilities tied to how models are trained, how they make decisions, and how massive amounts of data are handled.

In particular, Large Language Models (LLMs) and generative AI have opened up entirely new opportunities for bad actors to exploit. Hackers have found clever ways to mess with these advanced AI systems—sometimes by slipping in sneaky prompts (what’s called prompt injection), other times by feeding them tainted data during training, a tactic known as data poisoning. When attackers tamper with these systems, the fallout can be serious—think confidential data leaking out, malicious code running unchecked, or false information spreading far and wide. As the need to secure AI deployments grows ever more urgent, both regulators and industry leaders are putting greater pressure on organisations to show they have strong AI governance and risk management in place. Following established security frameworks isn’t just about doing the right thing anymore—it’s quickly becoming essential for staying ahead of the competition and keeping up with ever-changing regulations.

Making Sense of the Risks: A Closer Look at Essential AI Security Frameworks

OWASP Top 10 for LLM Applications 2025: What Every Developer Needs to Know About the Biggest Security Risks

The Open Web Application Security Project, or OWASP, has built a worldwide reputation as a leading voice in web application security. Seeing just how tricky LLMs can be from a security standpoint, OWASP rolled out its Top 10 for LLM Applications—a list that, with the 2025 edition, captures the newest threats and up-to-date industry perspectives. Think of this list as a hands-on guide for developers—it shines a spotlight on the most pressing vulnerabilities found in LLM-powered systems, from sneaky prompt manipulation all the way to the risk of runaway resource usage. It gives security teams hands-on ways to tackle these risks, along with clear checklists to help keep everything in check.

One of the most pressing vulnerabilities highlighted in the OWASP Top 10 for LLM Applications 2025 is Prompt Injection (LLM-01). This vulnerability, which remains the highest-ranked risk, occurs when unsanitised user inputs manipulate an LLM’s behaviour or outputs. Because it’s so easy to exploit—and the fallout can spread so far—this issue sits right at the top of the priority list. To guard against these threats, it’s wise to thoroughly check and filter all user inputs to keep out harmful prompts, use structured prompting methods that make it harder for attackers to slip in rogue instructions, and set up role-based access controls so only the right people have certain permissions.

Unbounded Consumption is yet another major risk worth noting. Abuse of an LLM’s API usage limits can lead to denial of service for legitimate users or result in unexpectedly high operational costs. To keep things running smoothly, you’ll want to set limits on how many requests users can make, put caps in place with user quotas, and use dynamic resource management to avoid overload. It’s also smart to add timeouts and throttle back any operations that start hogging too many resources.

On top of that, there’s the risk of system prompt leakage and accidental exposure of sensitive information LLM02 (sometimes listed as LLM-06) could spell trouble. If sandboxing isn’t set up properly or logging gets out of hand, there’s a real risk that internal prompts, sensitive system commands, or even confidential information could leak out. The solution? Organisations need to get serious about secure prompt management, put strict output filters in place, sanitise data thoroughly, and make sure strong access controls are enforced at every step.

Other critical vulnerabilities highlighted by OWASP include Insecure Output Handling, Overreliance on Untrusted Output, Insufficient Monitoring and Logging, Server-Side Request Forgery (SSRF), Denial of Service (DoS), and Supply Chain Vulnerabilities.¹ Since its release, the OWASP Top 10 for LLM Applications has been widely adopted by organisations across various sectors as a foundational resource for conducting red-teaming exercises, performing security audits, and developing training programmes for security practitioners, thereby accelerating the overall maturity of AI security practices on a global scale.

The OWASP Top 10 for LLM Applications provides a continuously evolving, practical, and developer-focused perspective on securing LLM applications. Because it’s shaped by its community and frequently refreshed, it stays in step with the latest threats and what people in the industry are actually seeing. By zeroing in on real-world weaknesses and providing practical ways to address them, it gives developers and security teams the tools they need to make LLM-powered systems far more secure.

Table: OWASP Top 5 LLM Vulnerabilities 2025 and Key Mitigation Strategies

| Vulnerability | Description | Key Mitigation Strategies |

|---|---|---|

| Prompt Injection | Manipulation of LLM behaviour through crafted inputs. | Input validation, structured prompting, role-based access control. |

| Unbounded Consumption | Abuse of API limits leading to DoS or high costs. | Rate limiting, resource quotas, cost monitoring. |

| System Prompt Leakage & Sensitive Information Disclosure | Exposure of internal prompts or confidential data. | Secure prompt management, output filtering, access controls. |

| Insecure Output Handling | Failure to properly validate and sanitise LLM output. | Output validation, encoding, content security policies. |

MITRE ATLAS: Charting How Attackers Target AI Systems

While OWASP offers a developer-centric view of vulnerabilities, MITRE ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems) provides an adversary-centric perspective. This framework is an ATT&CK-style matrix that catalogues real-world adversarial tactics, techniques, and case studies against AI systems, gathered from red-team exercises, academic research, and incident reports. ATLAS organises threats across the different stages of the AI lifecycle, including pre-training, training, inference, and post-inference. At every stage, attackers have particular objectives in mind—maybe they’re after higher-level access, trying to siphon off confidential data, or looking to swipe proprietary AI models.

With this organised framework in hand, security teams can zero in on the kinds of adversarial tactics most likely to target their own AI systems. What’s more, ATLAS makes it possible for organisations to put their defences to the test by simulating these attack techniques with tools like CALDERA and Counterfit. When security teams know exactly how attackers might strike, they can put the right defences in place—rigorous input checks, thorough data integrity safeguards, and advanced systems that catch unusual activity—making it much harder for threats to slip through the cracks.

Examples of adversarial tactics catalogued in MITRE ATLAS include Reconnaissance, where attackers gather information about the AI system; Resource Development, focused on preparing the necessary tools and infrastructure; Initial Access, detailing how attackers gain entry; ML Model Access, targeting the AI model itself; Execution, involving the running of malicious code; Persistence, ensuring continued access; Privilege Escalation, aiming for higher-level permissions; Defence Evasion, to avoid detection; Credential Access, for stealing authentication information; Discovery, to learn about the AI environment; Collection, of relevant artefacts; ML Attack Staging, to tailor the attack; Exfiltration, of data; and Impact, the ultimate goal of the attack.¹⁶ Techniques under these tactics include Prompt Injection (AML.T0051), Data Poisoning, Model Inversion, Model Theft, and Denial of Service.³ Organisations utilise MITRE ATLAS in various use cases, including threat modelling to anticipate and prioritise potential adversarial scenarios, purple teaming to guide collaborative red and blue team exercises, and control validation to evaluate the effectiveness of AI-specific security controls.

MITRE ATLAS takes you inside the mind of an attacker, offering a structured way to look at AI threats from the adversary’s perspective—a perspective that’s absolutely crucial if you want to build defences that stay a step ahead. Because it’s organised like an ATT&CK matrix, security teams can start thinking like attackers themselves—mapping out likely tactics, anticipating where the next move or weak spot might be. Breaking threats down according to each stage of the AI lifecycle makes it much easier to grasp where attacks might strike and how serious their consequences could be.

Table: Example MITRE ATLAS Tactics and Techniques for AI Systems

| Tactic | Technique | Description |

|---|---|---|

| Reconnaissance | Search for Victim’s Publicly Available Research Materials | Adversaries gather information about the target AI system from publicly available sources. |

| Initial Access | LLM Prompt Injection | Adversaries use crafted prompts to manipulate the behaviour of large language models. |

| ML Model Access | ML-Enabled Product or Service | Adversaries interact with an AI system through its intended interface to gather information or launch attacks. |

| Execution | Command and Scripting Interpreter | Adversaries run malicious code using scripting languages or command-line interfaces. |

| Impact | Denial of ML Service | Adversaries attempt to disrupt the availability of the AI system. |

NIST’s AI Risk Management Framework: Taking a Big-Picture Approach to Building Trustworthy AI

Developed under the National Artificial Intelligence Initiative Act, the NIST Artificial Intelligence Risk Management Framework (AI RMF) offers voluntary, non-sector-specific guidance for organisations seeking to integrate trustworthiness considerations throughout the entire AI system lifecycle. At its core, the framework is designed to help people, businesses, and society as a whole navigate and manage the risks that come with using AI systems.

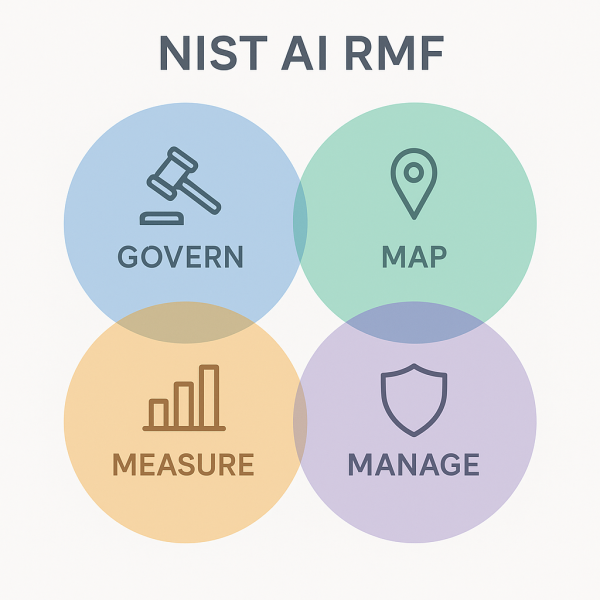

At its heart, the AI RMF breaks down into four main functions. First, there’s GOVERN, which is all about setting up policies, defining roles, and making sure there’s accountability for AI across the organisation. Next comes MAP, where you take stock of your AI assets, figure out the system’s boundaries, and identify possible risk scenarios. MEASURE is the step where you dig into the numbers—using performance metrics, bias checks, and stress tests to quantify your risk exposure. Finally, MANAGE covers the hands-on work of choosing, putting in place, and keeping an eye on the right strategies and controls to keep those risks in check. NIST highlights six key pillars for building trustworthy AI—robustness, reliability, fairness, explainability, privacy, and security—each woven into the framework’s core activities. In late 2024, NIST rolled out an AI RMF profile designed just for generative AI—tackling challenges like content authenticity, hallucinations, and model misuse. With this update, the core framework now covers the new risks that come with GenAI technologies.

NIST’s AI Risk Management Framework gives organisations a flexible, all-encompassing toolkit to tackle the many risks that come with AI—while keeping trust, transparency, and responsible development front and centre at every stage of the process. Because it’s both voluntary and not tied to any one industry, the framework gives all sorts of organisations the freedom to adapt it to their unique needs. These four core functions offer a clear, organised way to tackle risk management throughout the entire AI lifecycle. By emphasising trustworthy AI traits, the framework goes beyond just security and addresses a wider range of important considerations.

Table: NIST AI RMF Core Functions and Key Activities

| Core Function | Primary Objectives | Key Activities |

|---|---|---|

| GOVERN | Establish AI governance and accountability. | Develop policies, define roles, ensure oversight. |

| MAP | Identify AI assets and potential risks. | Define system boundaries, categorise risks, assess impacts. |

| MEASURE | Quantify risk exposure. | Implement metrics, conduct bias audits, perform stress testing. |

| MANAGE | Implement and monitor risk mitigation strategies. | Select controls, monitor effectiveness, respond to incidents. |

Strength in Synergy: How These Frameworks Work Together to Create Truly Comprehensive AI Security

Each framework—OWASP Top 10, MITRE ATLAS, and NIST AI RMF—brings something unique to the table, but it’s when you use them together that their real value shines through, offering a well-rounded and thorough approach to keeping AI systems secure. For developers, the OWASP Top 10 serves as a hands-on, regularly refreshed checklist—making it easier to spot and fix the most common vulnerabilities lurking in LLM applications right at the code level. Thanks to its adversary-centric perspective, MITRE ATLAS gives security teams a window into how real-world attackers operate—their tactics, their tricks, and the methods they use to breach AI systems. Armed with this insight, defenders can rehearse likely attack scenarios and shore up their defences before trouble strikes. Finally, the NIST AI RMF steps back to look at the bigger picture, helping leaders and risk managers weave AI security and trustworthiness directly into the day-to-day workings and core policies of their organisations.

When organisations bring all three frameworks together, they lay the groundwork for a layered, deeply resilient defence around their AI systems. In practice, this means building AI models and applications with OWASP’s security guidelines in mind, putting them through their paces with adversarial tests inspired by MITRE ATLAS, and maintaining strong governance and risk oversight by following the principles set out in the NIST AI RMF. By taking this combined approach, organisations can tackle AI security from every angle—covering the full lifecycle of their systems and reaching every level of the business.

Looking Ahead: How cloudsineAI Can Help You Build a Safer, More Trustworthy AI Future

At cloudsineAI, the mission is simple: help organisations cut through the maze of AI security so they can roll out cutting-edge, reliable generative AI applications with confidence. With a deep understanding of the unique risks that come with LLMs, cloudsineAI has developed WebOrion® Protector Plus, a GenAI firewall, which is carefully crafted to meet the standards set by the OWASP Top 10, MITRE ATLAS, and NIST AI RMF frameworks.

cloudsineAI takes a hands-on approach, delivering real-world products designed to help organisations put top AI security framework recommendations into action. By zeroing in on the most pressing vulnerabilities flagged by OWASP and adopting proactive defences in line with MITRE ATLAS, they give organisations real, practical ways to boost the security of their generative AI systems—perfectly in step with the broader principles outlined by the NIST AI RMF.

Conclusion

In today’s fast-changing digital world, keeping AI systems secure isn’t just a one-and-done task—it requires a thorough, multi-layered strategy that covers every stage of development and deployment. That means going beyond just fixing issues in the code—you also need to anticipate how attackers might try to exploit your systems and put strong governance structures in place across the organisation. When organisations bring together the hands-on guidance from the OWASP Top 10 for LLM Applications, the attacker’s-eye view offered by MITRE ATLAS, and the broad risk management approach of the NIST AI RMF, they give themselves the best shot at creating AI systems that aren’t just cutting-edge, but also secure and dependable. At cloudsineAI, we’re committed to being more than just a vendor—we’re your partner in the ongoing challenge of AI security. With a blend of specialised tools and genuine know-how, we help organisations put top frameworks into action and protect their AI-powered applications from an increasingly complex array of threats.

Are you ready to strengthen the defences of your generative AI applications? Contact us today!