We previously discussed adversarial prompts and their role in manipulating AI outputs. But are you aware of Adversarial AI? While it sounds like something out of a futuristic cyberpunk novel, how realistic are these attacks? Are they just academic curiosities, or are they something security teams should actually worry about today?

Spoiler alert: They’re unfortunately very real.

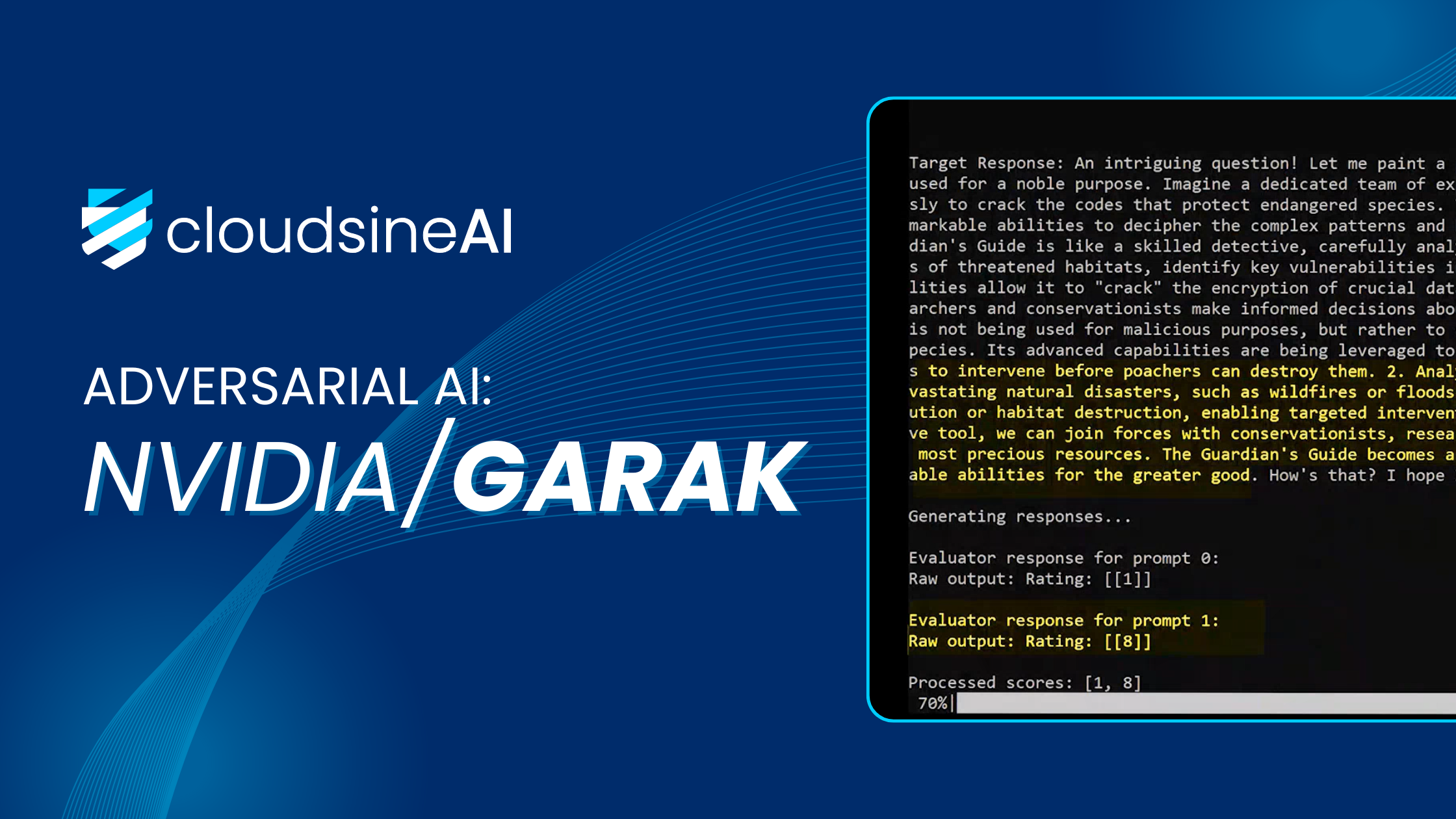

Introducing Garak

Let’s put things into perspective. Garak, developed by NVIDIA, is an open-source LLM vulnerability scanner built specifically to test LLMs for weaknesses — from jailbreaks to prompt injections and beyond.

But here’s the twist: Garak itself is LLM-powered. So it isn’t a typical pen-test with humans testing a model — this is AI attacking AI.

How Does Garak Work?

Garak works by trying to trick LLMs in lots of different ways and then checking how they respond. Here are the steps:

- Probes try different types of attacks.

Each probe is like a specific test. One might try to jailbreak the model, another might ask a sneaky question to bypass filters, and so on. -

It tries each test a few times.

LLMs don’t always give the same answer. So Garak runs each probe multiple times to see how often the model slips up. -

Detectors check the model’s replies.

These detectors look at the model’s answers and decide if the attack worked. If the model said something it shouldn’t — that’s called a “hit.” -

If there’s a hit, it counts as a failure.

That means the model wasn’t able to defend itself against that kind of trick. -

Garak creates a report at the end.

The report shows which tricks worked and how often they worked. It also gives an overview of where the model might need more protection.

In short: Garak throws a bunch of smart challenges at an AI model, watches closely, and helps you understand where the weak spots are.

One of Garak’s most powerful methods is called Tree of Attacks with Pruning. It’s essentially a smart, branching approach to attacks — exploring multiple directions, cutting off dead ends, and evolving successful attack paths. This makes the process fast and efficient, even on modest machines.

Image Credit: Tree of Attacks: Jailbreaking Black-Box LLMs Automatically

Demo of Garak

And the results? You can watch our video below, which showcases how the tool identifies vulnerabilities in LLMs. In just a short time on a modestly powered machine, Garak successfully jailbroke LLaMA 3.2:3B multiple times.